[Note: this piece is cross-published as a guest entry on the LangChain blog.]

There's something of a structural irony in the fact that building context-aware LLM applications

typically begins with a systematic process of decontextualization, wherein

- source text is divided into more or less arbitrarily-sized pieces before

- each piece undergoes a vector embedding process designed to comprehend context, to capture information inherent in relations between pieces of text.

Not altogether unlike the way human readers interact with natural language, AI applications that rely

on Retrieval Augmented Generation (RAG) must balance the analytic precision of drawing inferences from

short sequences of characters (what your English teacher would call "close reading") against the

comprehension of context-bound structures of meaning that emerge more or less continuously as those

sequences increase in length (what your particularly cool English teacher would call "distant reading").

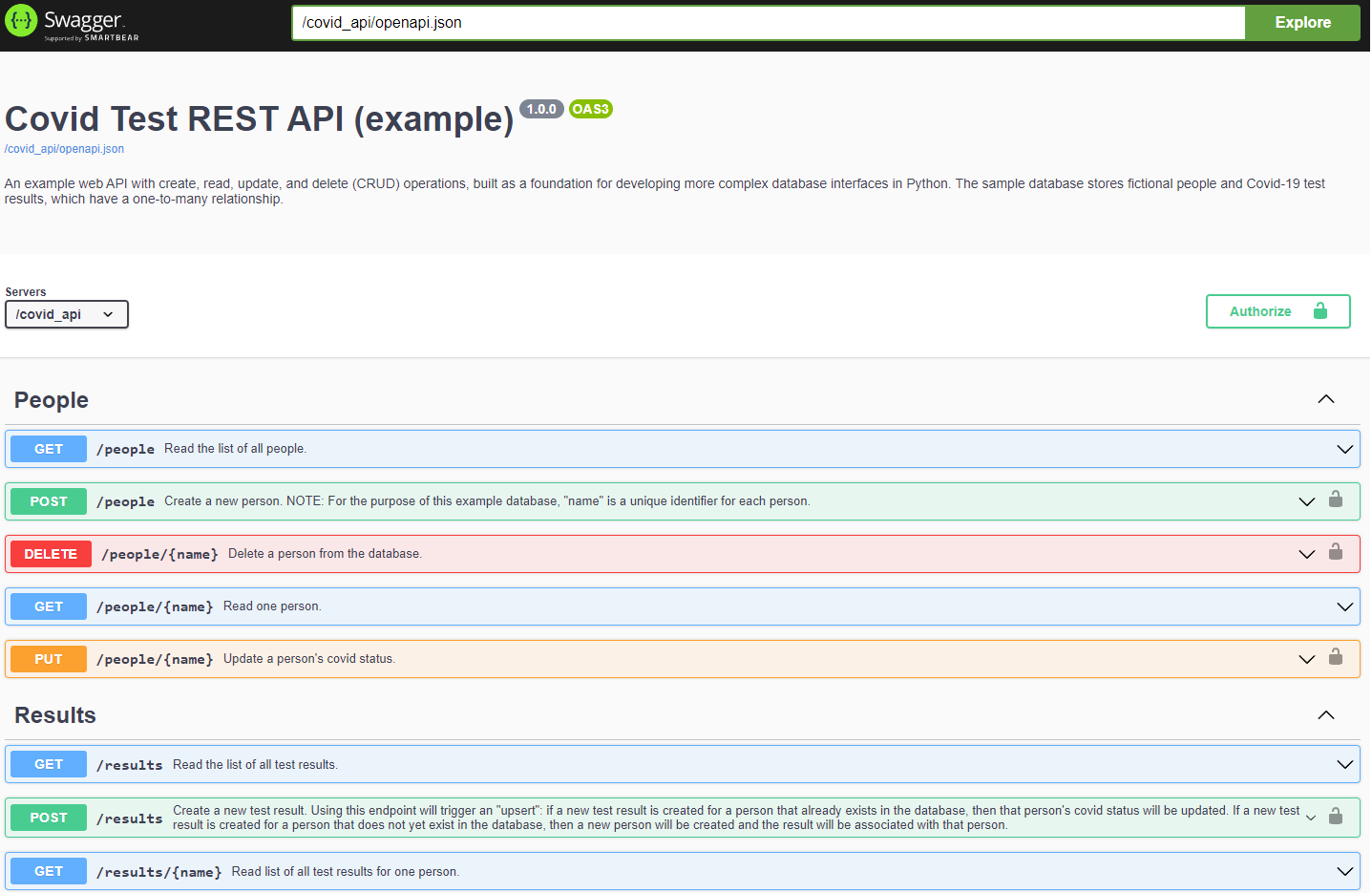

This blog explores a novel approach to striking this balance with HTML content, leveraging important

contextual information inherent to document structures that is typically lost when LLM applications are

built over web-scraped data or other HTML sources. In particular, we will test some methods of combining

Self-querying with LangChain's new

HTML Header Text Splitter, a "structure-aware" chunker that splits text at the element level

and adds metadata for each chunk based on header text.

Click to read on...

The project of using LLM dialogue agents to simulate philosophers conversing with each other

is in one sense just a game—a way of playing with language dolls that are, if not lifelike, rendered in amazing detail.

But the implications are far-reaching, holding in relief the complex relationship between these static models of language and the more or less

malleable systems of knowledge that they can contain and interact with.

Like the previous project of using LLM agents to simulate the discourse of a university English class,

these experiments are designed in the spirit of exploration. I use LangChain,

powered by GPT-4, first to build agents to role-play as specific philosophers from throughout history (with information extracted from the Stanford Encyclopedia of Philosophy), then to

simulate a series of dialogues in which one agent tries to convince another to adopt a philosophical position that

contradicts its own.

Some open questions: To what extent are these interactions deterministic? What makes an agent more likely to convince or to be convinced?

What does the relative tractability of an agent indicate about the philosophical position it represents?

What does that tractability indicate about how the LLM itself reflects the underlying system of knowledge?

Click to read on...

A quick project to demonstrate how to build structured information about forms of bias in unstructured webpage content. The objectives, scoring criteria, and schema are arbitrarily defined, so this can serve as an open-ended demonstration of how to use LangChain with GPT-4 to build structured, evaluative information from language data loaded from URLs.

Click to read on...

This entry is a follow-up/supplement to the previous entry, "GPT-4 Goes to College." Read that one first for context and the rest of the code.

Previously, I used LangChain to create an agent to play the role of a student in a university English class. Given a set of "tools" (primary source texts along with a course syllabus and lecture notes), the agent was prompted to compose responses to its own previous responses to a writing assignment, simulating the process of learning through participation in intellectual exchange. This allowed for a glimpse of some potential vectors through which bias can propagate in reasoning chains involving autonomous interactions across multiple internal and external knowledge systems.

Such processes (while relatively benign in this case) pose a unique set of challenges for traceability and evaluation versus more familiar—and more deterministic—processes related to biases inherent in LLM models themselves, i.e. that emerge during training or that exist in natural language data used for training.

Here, I test the potential for GPT-4 (or other LLMs) to be used reflexively to trace and to evaluate forms of bias that emerge within the ReAct framework.

Click to read on...